The Washington Post looking into the data sets Google uses to train its chatbots and some surprising results popped up:

As The Post put it:

To look inside this black box, we analyzed Google’s C4 data set, a massive snapshot of the contents of 15 million websites that have been used to instruct some high-profile English-language AIs, called large language models, including Google’s T5 and Facebook’s LLaMA. (OpenAI does not disclose what datasets it uses to train the models backing its popular chatbot, ChatGPT)

The Post worked with researchers at the Allen Institute for AI on this investigation and categorized the websites using data from Similarweb, a web analytics company. About a third of the websites could not be categorized, mostly because they no longer appear on the internet. Those are not shown.

We then ranked the remaining 10 million websites based on how many “tokens” appeared from each in the data set. Tokens are small bits of text used to process disorganized information — typically a word or phrase.

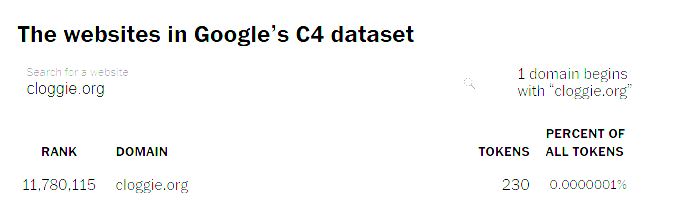

Because the article kindly included a search bar I found out my website is also included in the data set, with some 230 tokens. It would be interesting to see what exactly was included in those 230 tokens from the more than two decades of rambling contained in this site. Sadly, that’s not provided here. Nevertheless, an interesting look in the data used in training socalled AI programmes and its limitations.

No Comments